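Prometheus is an open-source systems monitoring and alerting toolkit originally built at SoundCloud. Since its inception in 2012, many companies and organizations have adopted Prometheus, and the project has a very active developer and user community. It is now a standalone open-source project, maintained independently of any company. To emphasize this and to clarify the project's governance structure, Prometheus joined the Cloud Native Computing Foundation in 2016 as its second hosted project, after Kubernetes.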

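Monitoring stack

cAdvisor + node-exporter + Prometheus + Grafana

- cAdvisor: collects container-level metrics

- node-exporter: collects and exposes host-level metrics for each node

- Prometheus: scrapes, processes, and stores the metrics

- Grafana: visualizes the data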

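Monitoring flow

- Container monitoring: Prometheus collects container metrics from cAdvisor. cAdvisor is built into the kubelet in K8S, so it does not need to be deployed separately. Prometheus stores the data and Grafana displays it.

- Node monitoring: node_exporter collects the resource metrics of the host it runs on. Prometheus stores the data and Grafana displays it.

- Master monitoring: the kube-state-metrics add-on retrieves object state from the K8S apiserver and exposes it over an HTTP endpoint. Prometheus stores the data and Grafana displays it.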

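Kubernetes monitoring metrics

Metrics for K8S itself

- Node resource utilization: monitor hardware resources such as CPU, memory, and disk on each node

- Node count: monitor the number of nodes relative to resource utilization and business load, to evaluate cost and capacity expansion

- Pod count: monitor the number of nodes and pods once load reaches a given level. This shows roughly how many servers are needed at each load stage and how much each pod consumes, which feeds the overall evaluation

- Resource object status: while K8S is running it creates many pods, controllers, jobs, and so on. These are all maintained as K8S resource objects, so the objects can be monitored to obtain their status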

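Pod monitoring

- Pod count per project: monitor the number of healthy and unhealthy pods separately

- Container resource utilization: track the resource utilization of each pod and of the containers inside it, evaluated across CPU, network, and memory

- Application: monitor the application's own behavior, such as concurrency and request responses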

| Metric | Implementation | Example |
|---|---|---|
| Pod performance | cAdvisor | CPU and memory utilization of containers |
| Node performance | node-exporter | CPU and memory utilization of nodes |
| K8S resource objects | kube-state-metrics | pod, deployment, service |
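To make the table more concrete, one query per source can be written down as Prometheus recording rules. This is only a sketch under assumptions: the file name, group name, and rule names are invented for illustration, and the `pod` and `container` labels assume Kubernetes 1.16 or later (older clusters use `pod_name` and `container_name`). The metric names are the ones commonly exposed by cAdvisor, node-exporter 0.16+, and kube-state-metrics.

```shell
# Write a hypothetical rules file; all rule and file names are illustrative.
cat > example-k8s-rules.yml <<'EOF'
groups:
  - name: example-k8s-overview
    rules:
      # Pod performance (cAdvisor): CPU cores used per pod over 5 minutes.
      # The container filters skip the pod-level cgroup and the pause container.
      - record: example:pod_cpu_usage_cores:rate5m
        expr: sum by (namespace, pod) (rate(container_cpu_usage_seconds_total{container!="", container!="POD"}[5m]))
      # Node performance (node-exporter): fraction of memory in use per node.
      - record: example:node_memory_used:ratio
        expr: 1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes
      # K8S resource objects (kube-state-metrics): pods per namespace and phase.
      - record: example:pods_by_phase:count
        expr: sum by (namespace, phase) (kube_pod_status_phase)
EOF
```

Prometheus only loads such a file if it is listed under `rule_files` in its configuration. The same expressions can also be pasted directly into the Prometheus expression browser.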

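Service discovery

Scrape targets are discovered through the K8S API and are always kept in sync with the cluster state. Prometheus obtains its targets dynamically and checks the API in real time to see whether each one still exists. This process is called service discovery.

Components supported by automatic discovery:

- node: discovers the nodes in the cluster

- pod: discovers running containers and their ports

- service: discovers the IP and ports of created services

- endpoints: discovers the pod addresses and ports behind each service

- ingress: discovers created ingress entry points and rules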
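In the Prometheus configuration, these five discovery modes are selected through the `role` field of `kubernetes_sd_configs`. The fragment below is a minimal sketch, not the configuration shipped with this article: the job names and file name are hypothetical, and the TLS, authentication, and most relabeling settings that a real cluster needs are left out.

```shell
# Write a hypothetical scrape-config fragment, one job per discovery mode.
cat > example-k8s-sd.yml <<'EOF'
scrape_configs:
  - job_name: example-nodes
    kubernetes_sd_configs:
      - role: node        # one target per cluster node
  - job_name: example-pods
    kubernetes_sd_configs:
      - role: pod         # one target per declared container port
    relabel_configs:
      # Keep only pods annotated with prometheus.io/scrape: "true".
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: "true"
  - job_name: example-services
    kubernetes_sd_configs:
      - role: service     # one target per service port
  - job_name: example-endpoints
    kubernetes_sd_configs:
      - role: endpoints   # one target per endpoint address and port
  - job_name: example-ingresses
    kubernetes_sd_configs:
      - role: ingress     # one target per ingress path
EOF
```

When Prometheus runs inside the cluster and no `api_server` is set, discovery uses the pod's service account, which is why an RBAC setup is part of the deployment. The service and ingress modes are typically combined with a probing exporter rather than scraped directly.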

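Deploying Prometheus to monitor Kubernetes in practice

Preparation

In this example, the images are pulled on the master node only.

`[root@master ~]# git clone https://github.com/redhatxl/k8s-prometheus-grafana.git`

The YAML files in this repository contain several errors. Corrected versions are given at the end of this article and can be used directly.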

Deploy node-exporter as a DaemonSet

`[root@master ~]# cd k8s-prometheus-grafana/`

`[root@master k8s-prometheus-grafana]# cat node-exporter.yaml`

`[root@master k8s-prometheus-grafana]# kubectl apply -f node-exporter.yaml`
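For orientation, a node-exporter DaemonSet with a NodePort Service could look roughly like the following. This is a sketch and not the repository's file: the object names, labels, namespace, and untagged image are assumptions, and only NodePort 31672 is taken from the URL used in the verification step of this article.

```shell
# Write a hypothetical manifest; review it before applying it to a cluster.
cat > example-node-exporter.yaml <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system        # assumed namespace
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
        - name: node-exporter
          image: prom/node-exporter   # assumed image, no tag pinned
          ports:
            - name: http
              containerPort: 9100     # node-exporter's default port
---
apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: kube-system
spec:
  type: NodePort
  selector:
    k8s-app: node-exporter
  ports:
    - name: http
      port: 9100
      targetPort: 9100
      nodePort: 31672                 # matches the URL used for verification
EOF
```

A DaemonSet schedules one pod per node, so every host's metrics become available without listing the nodes by hand.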

Deploy Prometheus

`[root@master k8s-prometheus-grafana]# cd prometheus/`

Deploy Grafana

`[root@master prometheus]# cd ../grafana/`

Verification

1) Check the pod and svc information

`[root@master grafana]# kubectl get pods -A`

2) Check the web pages

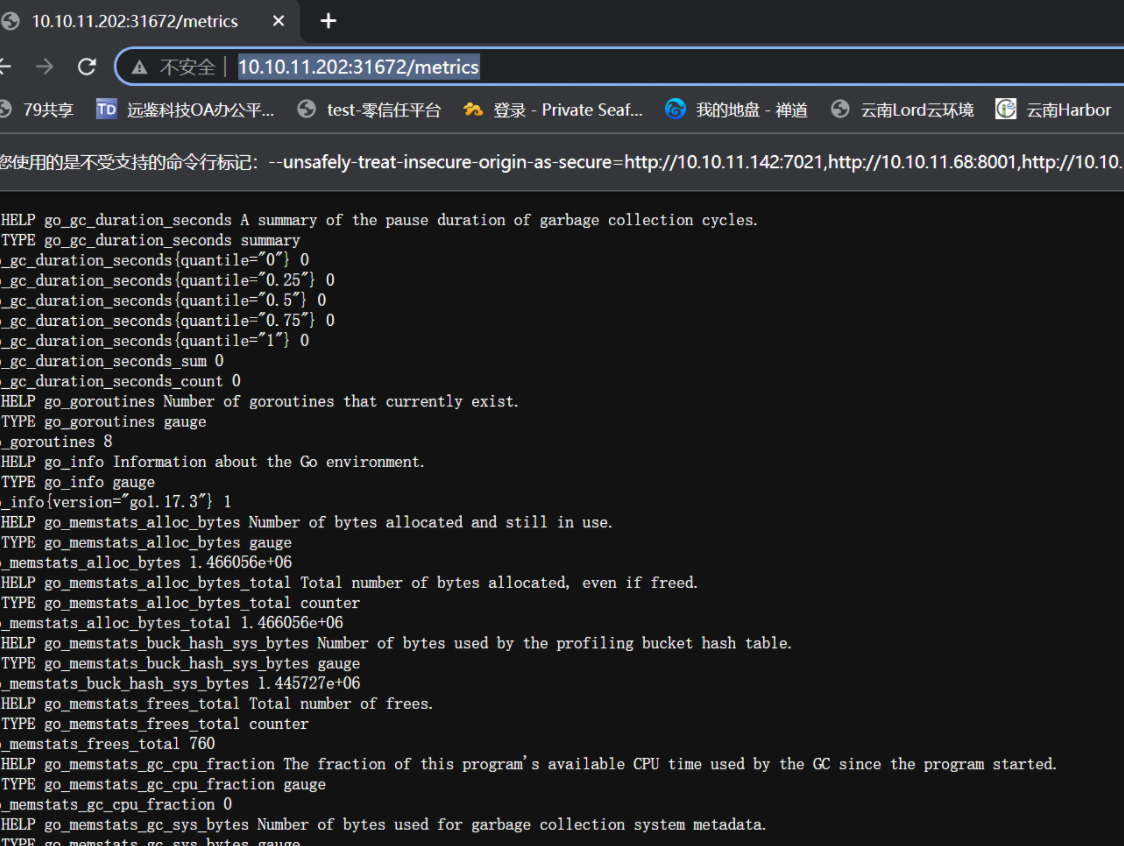

Visit http://10.10.11.202:31672/metrics to see the data collected by node-exporter.

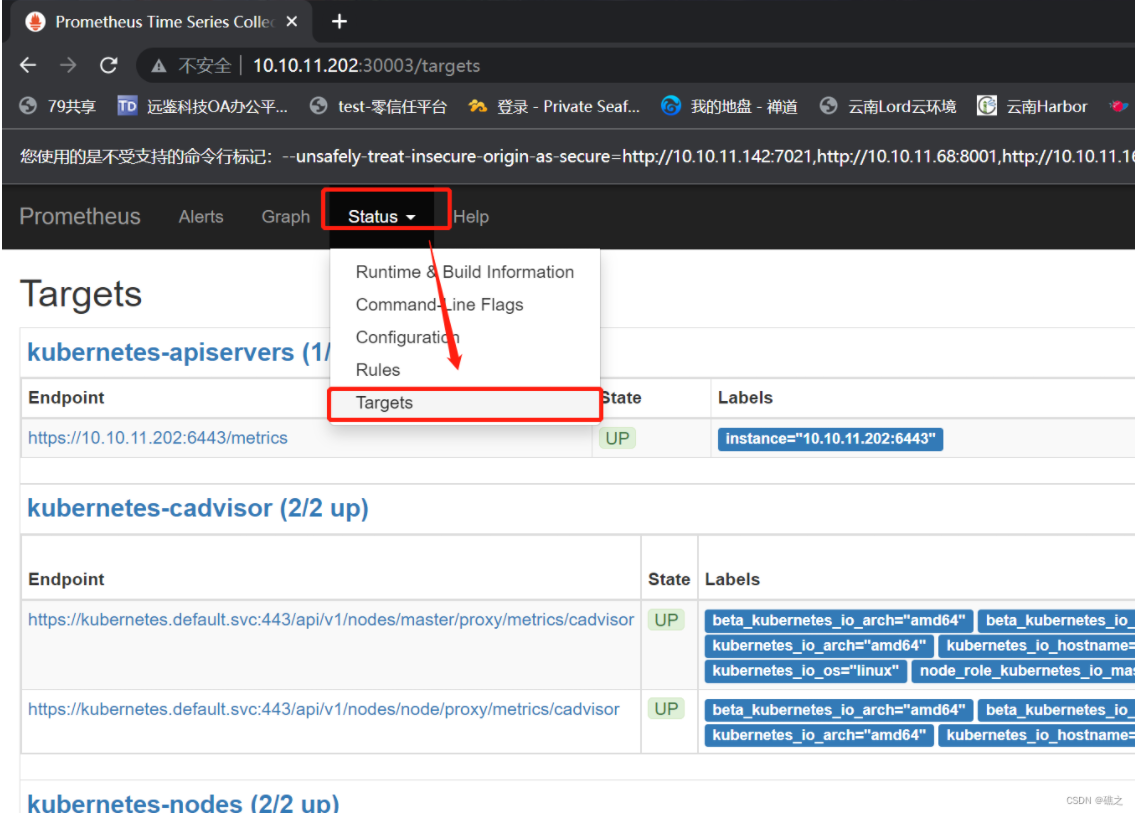

Visit http://10.10.11.202:30003 to open the Prometheus UI. Click Status > Targets to confirm that Prometheus has connected to the K8S apiserver successfully.

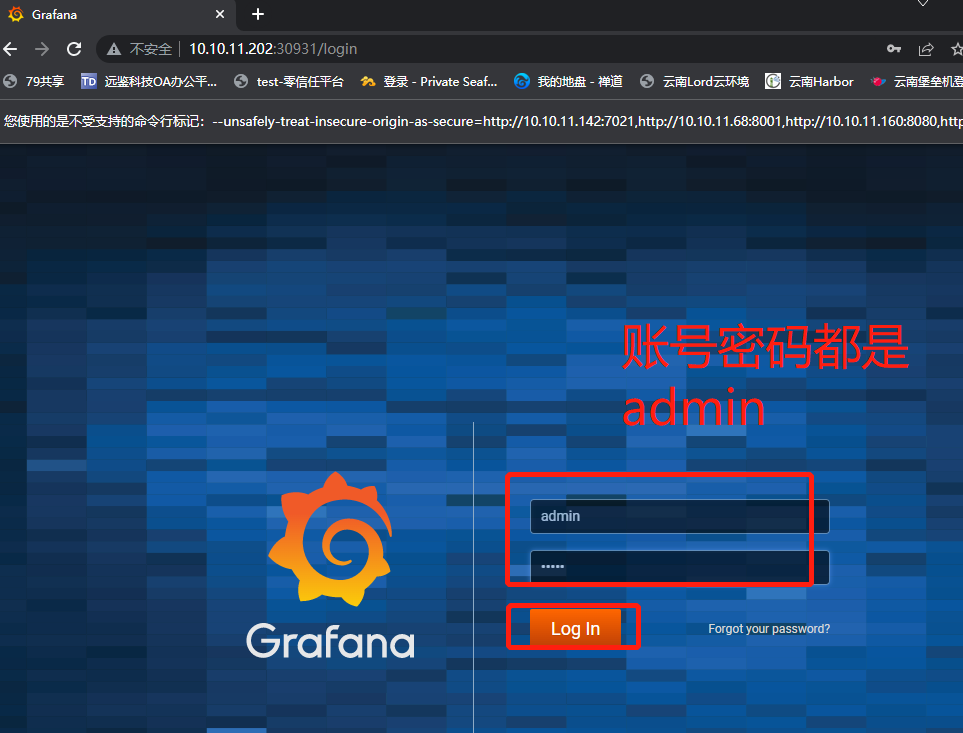

Visit http://10.10.11.202:30931 to open Grafana. The username and password are both admin.

Grafana template configuration

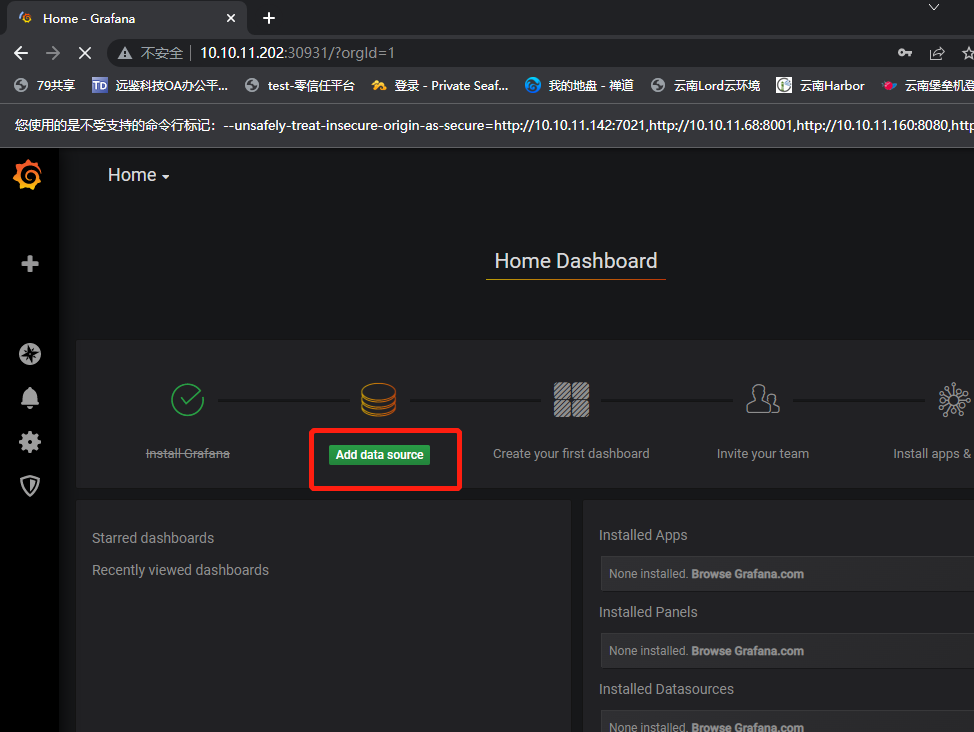

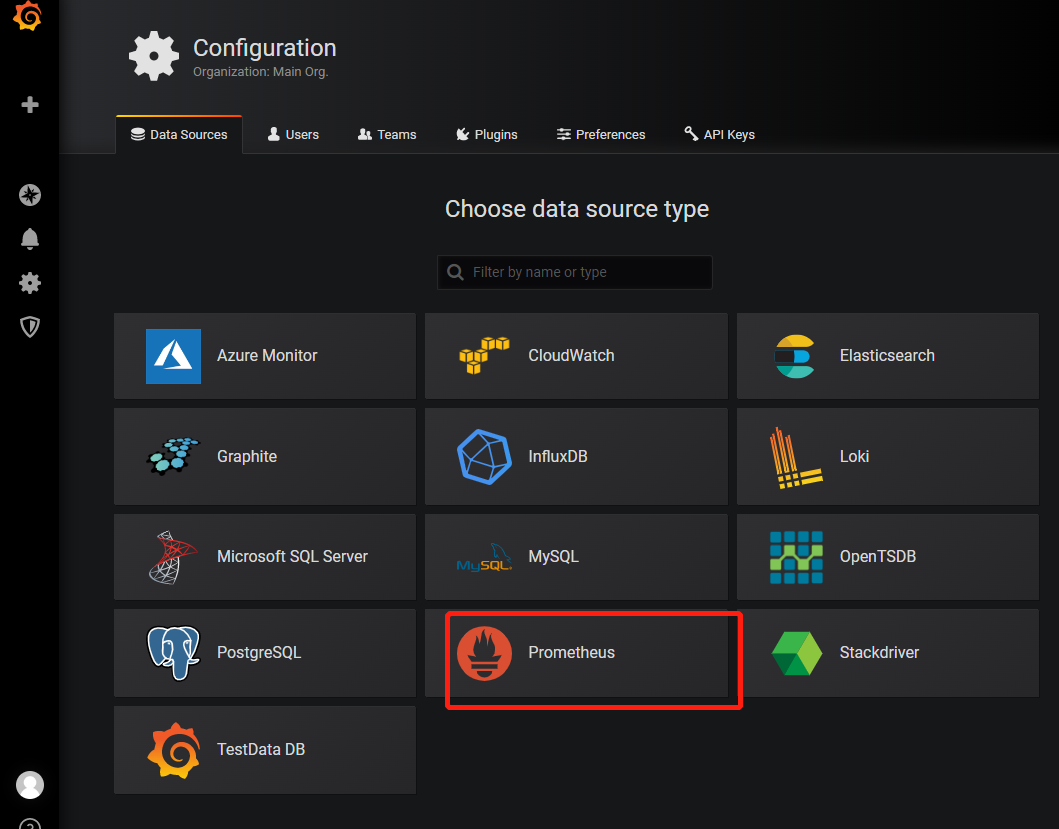

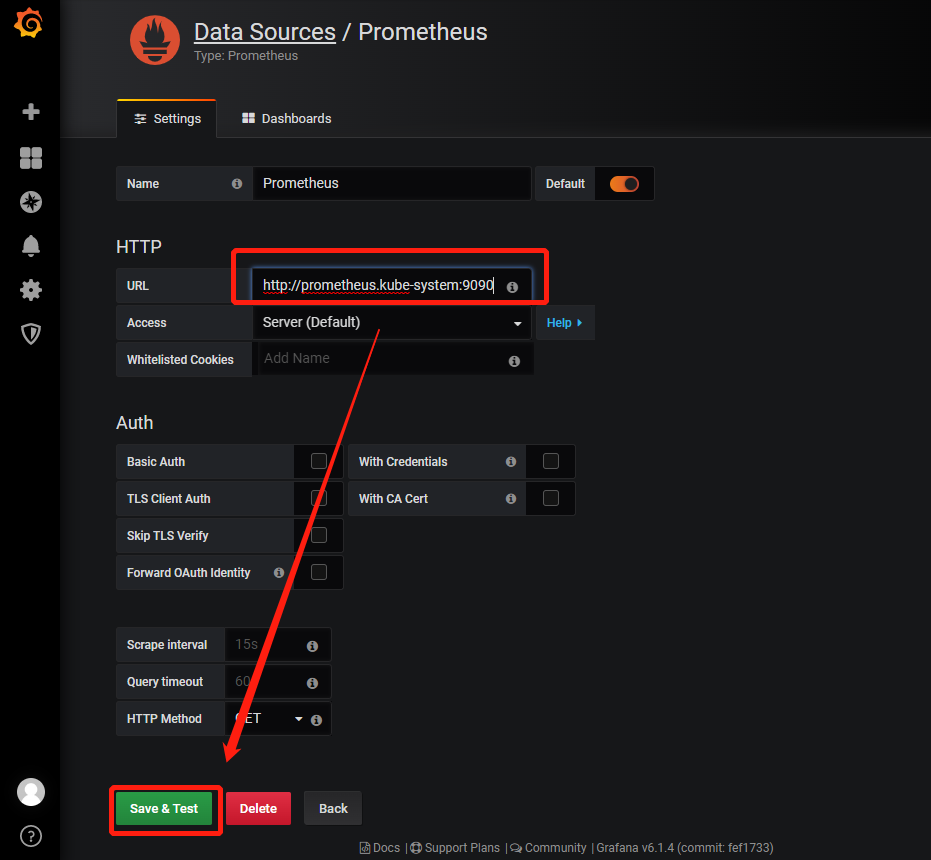

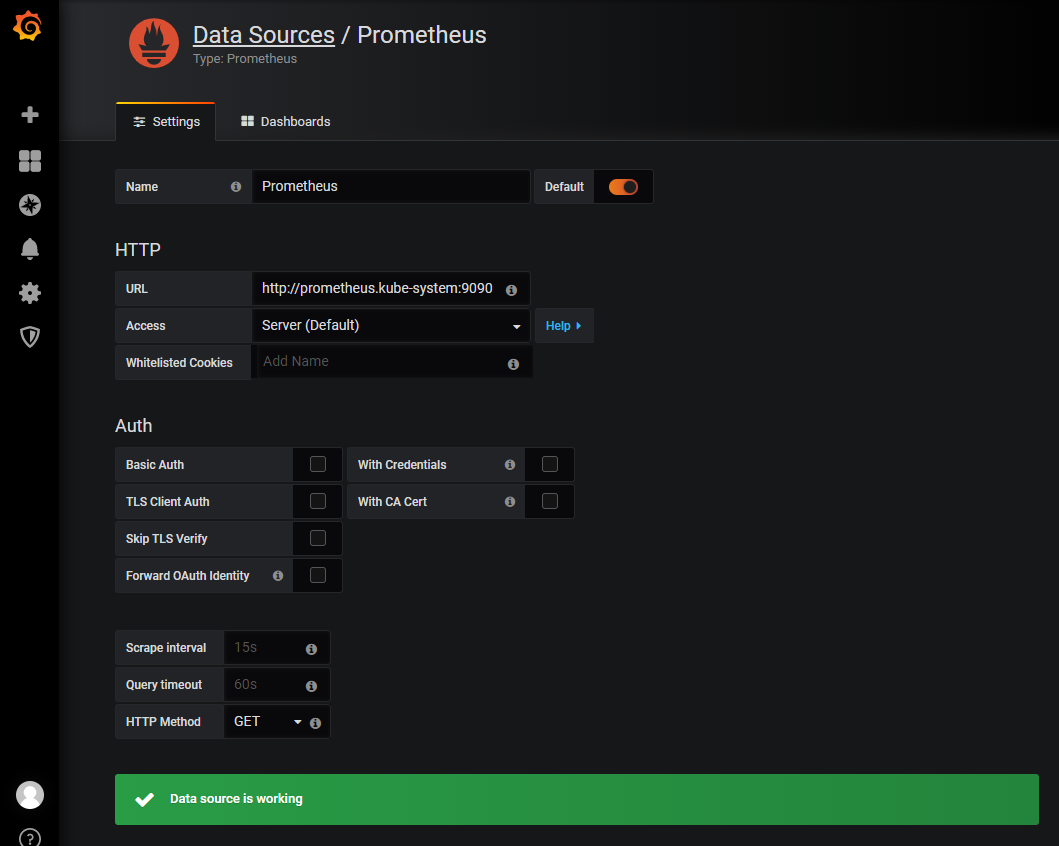

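1) Add a data source: click Add, then select Prometheus.

2) Fill in the settings in order. Pay attention to the URL here:

The URL must be written in the form service.namespace:port. The service name, namespace, and port can be read from the output of:

`[root@master grafana]# kubectl get svc -A`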
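The service.namespace:port format can be assembled from the three columns of that listing. In this sketch the service name `prometheus`, the namespace `kube-system`, and the port 9090 are placeholders, not values confirmed by this article. Substitute the ones shown in your own cluster, and note that the port is the service port, not the NodePort.

```shell
# Placeholder values: replace them with the ones from `kubectl get svc -A`.
svc="prometheus"
ns="kube-system"
port="9090"

# Grafana runs inside the cluster, so it can resolve the short
# <service>.<namespace> DNS name of the Prometheus service.
url="http://${svc}.${ns}:${port}"
echo "$url"
```

Using the in-cluster DNS name keeps the data source working even if the node IP or NodePort changes.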

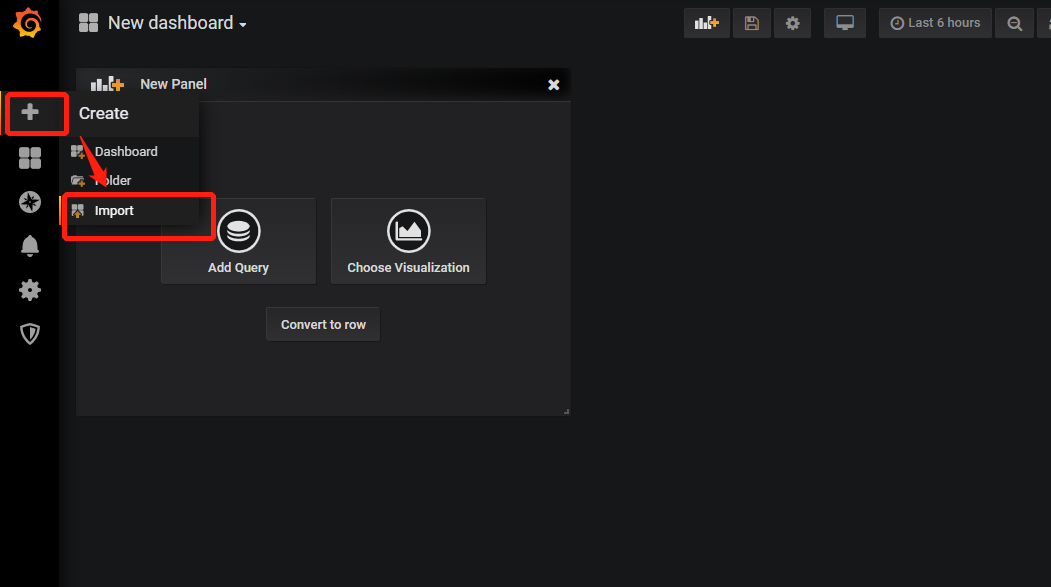

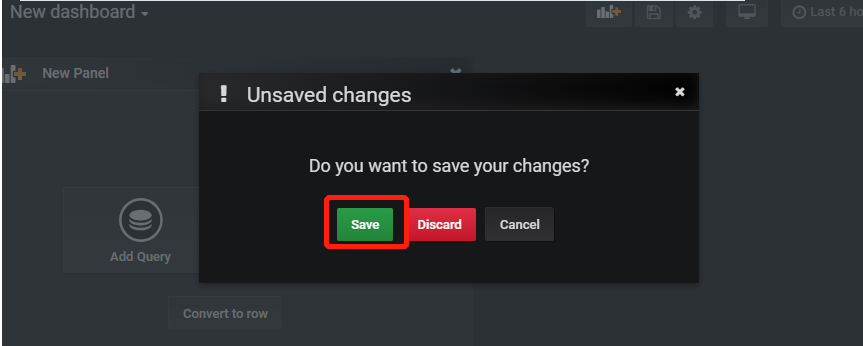

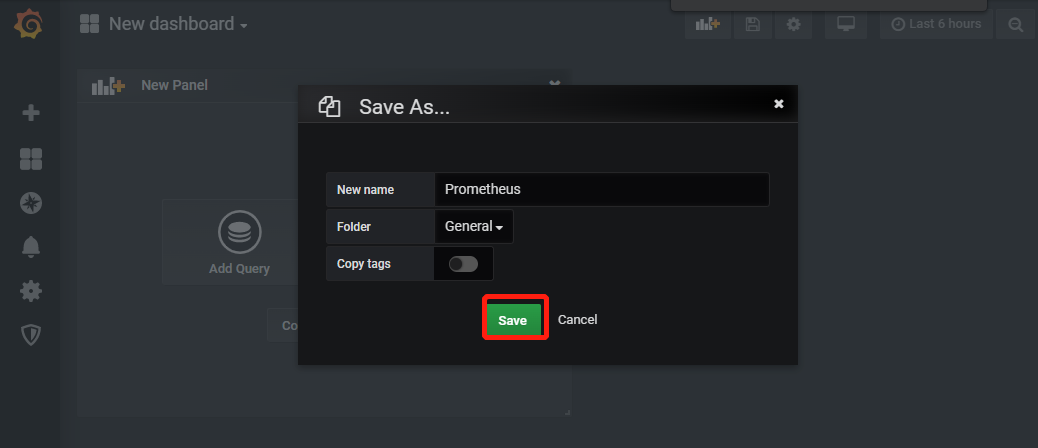

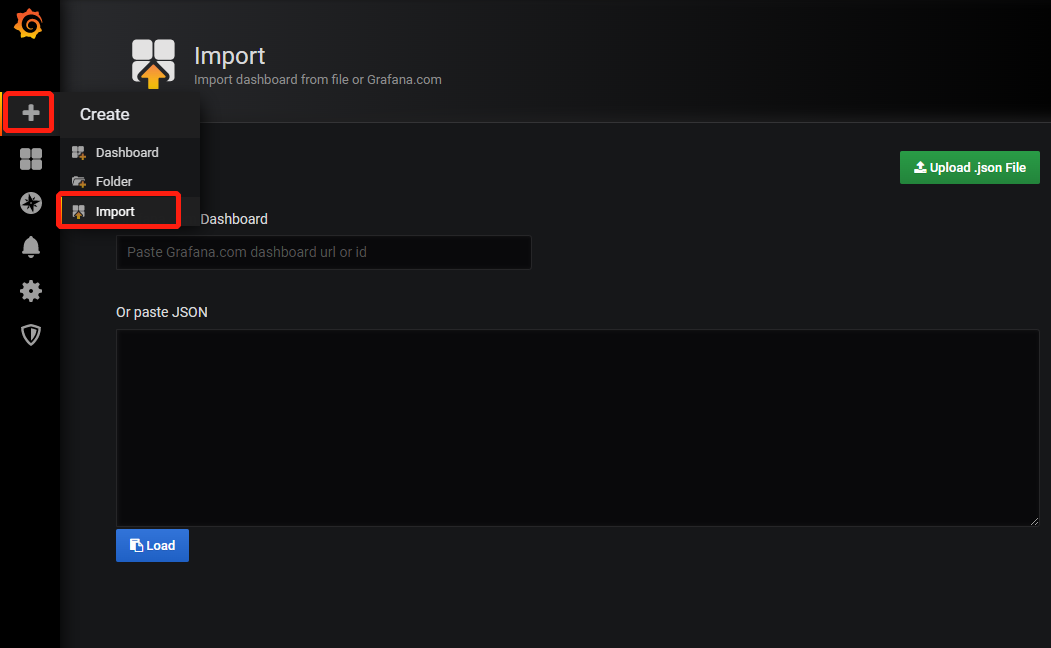

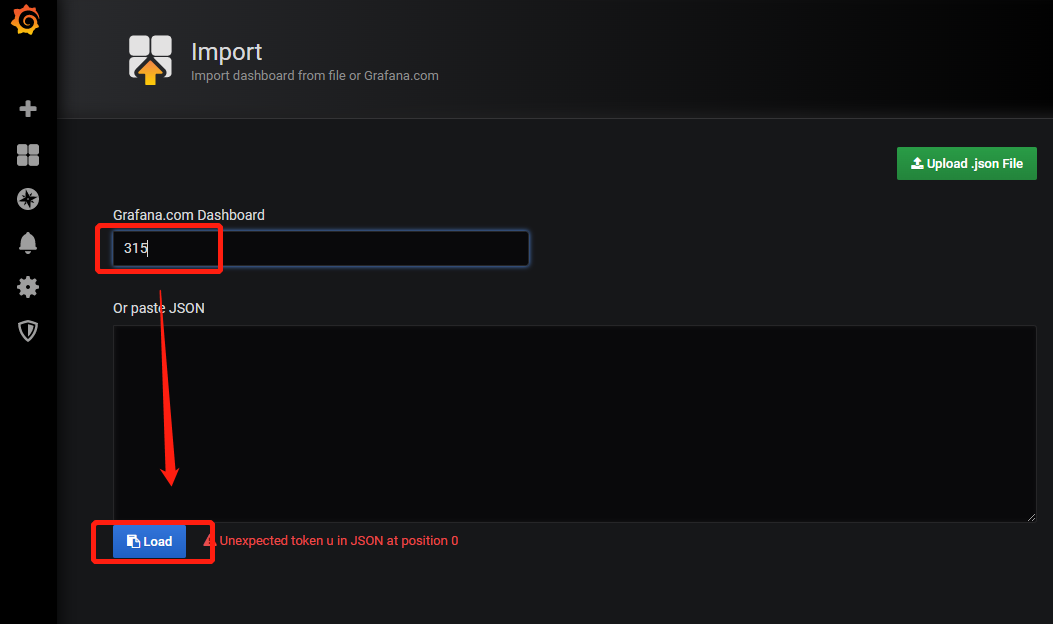

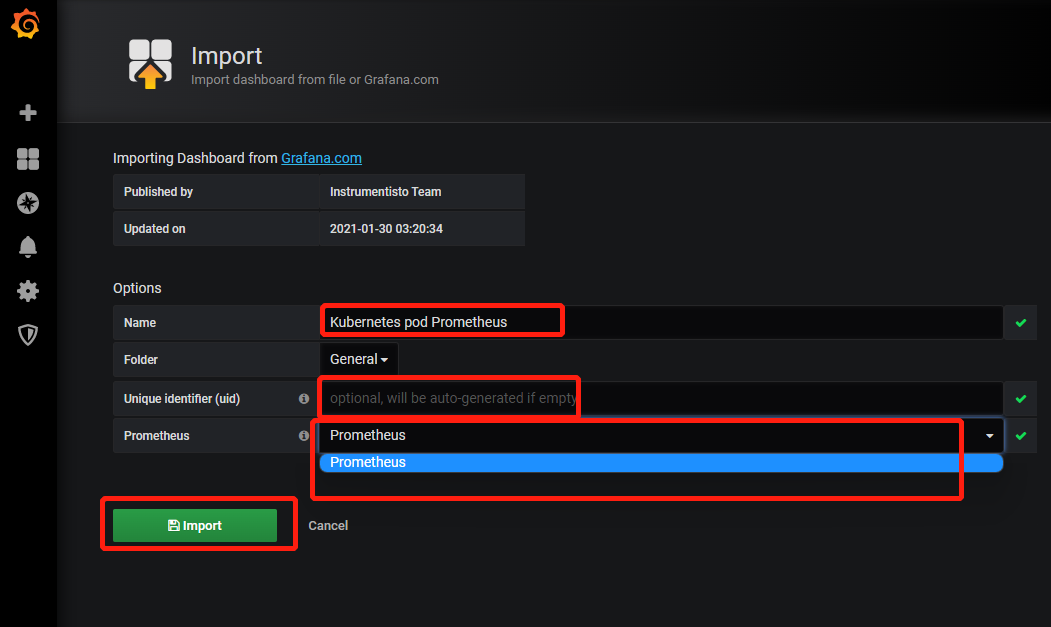

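3) Import the K8S Dashboard template.

4) The name can be anything. The uid can be left empty and will be generated automatically. Then select the Prometheus data source created earlier and click Import.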

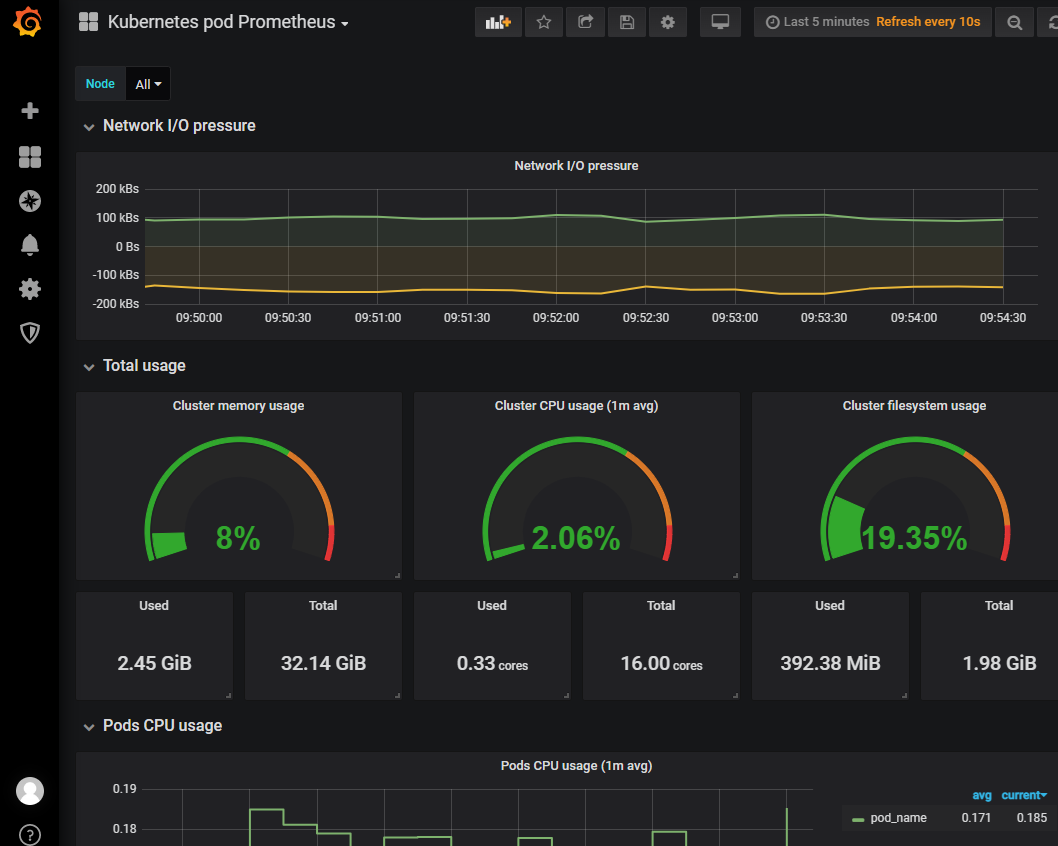

5) The resulting dashboard

YAML configuration files

node-exporter.yaml

`---`

rbac-setup.yaml

`apiVersion: rbac.authorization.k8s.io/v1`

configmap.yaml

`apiVersion: v1`

prometheus.deploy.yml

`---`

prometheus.svc.yml

`---`

grafana-deploy.yaml

`apiVersion: apps/v1`

grafana-svc.yaml

`apiVersion: v1`

grafana-ing.yaml

`apiVersion: extensions/v1beta1`